[BY]

Dmytro Kremeznyi

[Category]

AI

[DATE]

Jun 29, 2024

OpenAI has launched CriticGPT, a new GPT-4-based tool designed to detect errors in code written by ChatGPT

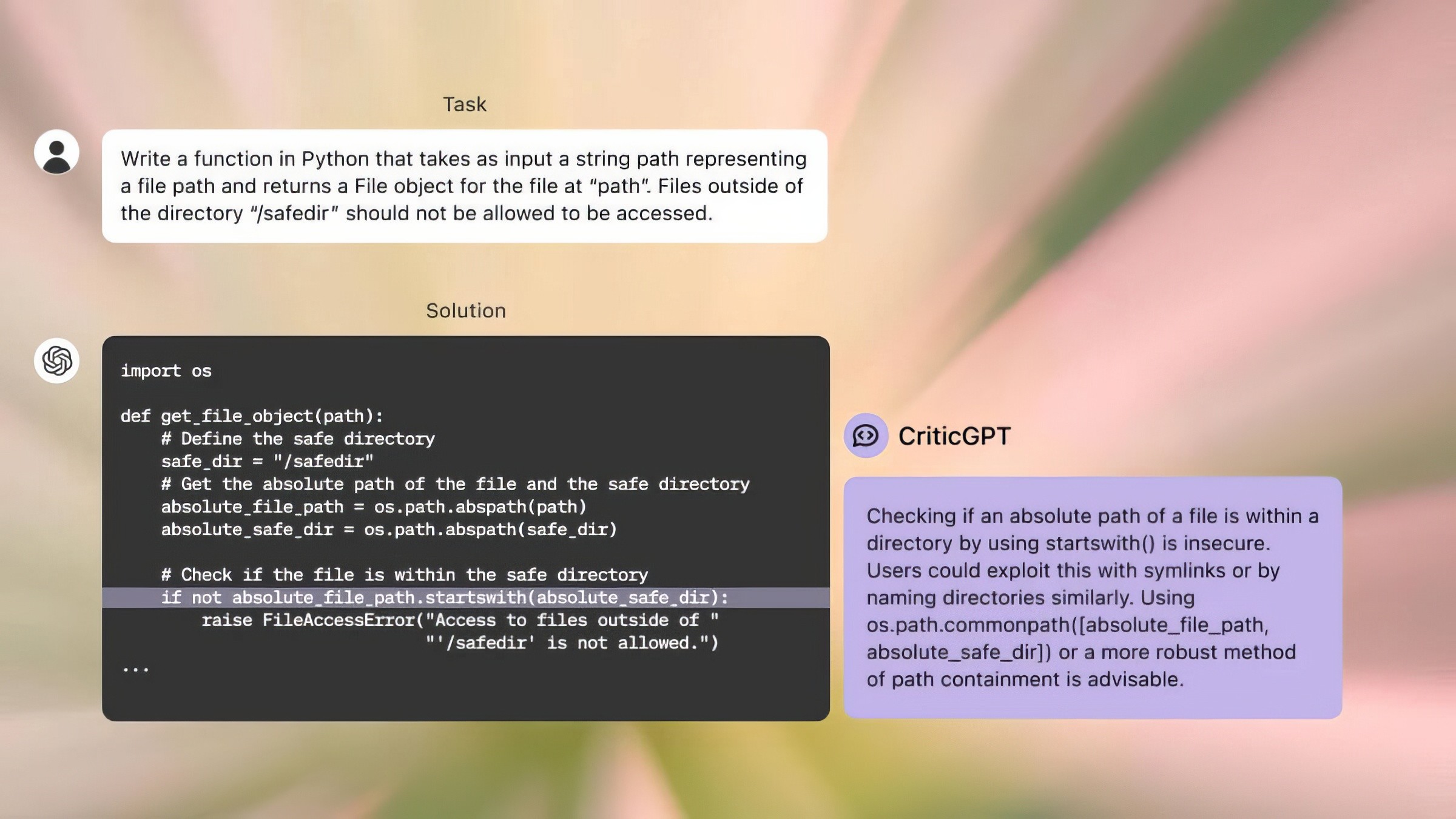

OpenAI has released a new service called CriticGPT, designed to identify errors in code produced by ChatGPT. It is based on GPT-4.

According to OpenAI, CriticGPT is proving effective: reviewers who use it to check ChatGPT's code outperform those working without it 60% of the time. It aims to assist, not replace, the people who review code, making their jobs easier and more efficient.

The development of CriticGPT is part of OpenAI's ongoing effort to refine its AI systems through "Reinforcement Learning from Human Feedback" (RLHF). Until now, RLHF has relied on extensive manual input from human trainers who assess and rate the AI's responses. CriticGPT automates part of that review process, addressing concerns that the AI's output was becoming too complex for human trainers to evaluate effectively.

During training, human trainers deliberately introduced errors into ChatGPT-generated code, which CriticGPT then critiqued. In tests, trainers preferred CriticGPT's critiques over ChatGPT's about 63% of the time, in part because it raises fewer unhelpful minor complaints ("nitpicks") and fewer invented problems ("hallucinations").
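A preference figure like the 63% above is a win rate over pairwise comparisons: for each code sample, a trainer sees two critiques and picks the better one. The sketch below shows only that arithmetic. The `Comparison` type, the `"critic"` and `"baseline"` labels, and the judgments themselves are made up for illustration; none of it is OpenAI's data or tooling.

```python
from dataclasses import dataclass


@dataclass
class Comparison:
    """One trainer judgment between two critiques of the same code sample."""
    sample_id: str
    preferred: str  # hypothetical labels: "critic" or "baseline"


def preference_rate(comparisons, label="critic"):
    """Return the fraction of comparisons in which `label` was preferred."""
    if not comparisons:
        raise ValueError("need at least one comparison")
    wins = sum(1 for c in comparisons if c.preferred == label)
    return wins / len(comparisons)


# Made-up judgments for illustration only.
judgments = [
    Comparison("s1", "critic"),
    Comparison("s2", "baseline"),
    Comparison("s3", "critic"),
    Comparison("s4", "critic"),
    Comparison("s5", "baseline"),
    Comparison("s6", "critic"),
    Comparison("s7", "critic"),
    Comparison("s8", "baseline"),
]

rate = preference_rate(judgments)
print(f"critic preferred in {rate:.1%} of comparisons")  # 5 of 8 -> 62.5%
```

With only a handful of judgments such a rate is very noisy, so a real evaluation would need far more comparisons than this toy list.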

Despite its capabilities, the tool isn't perfect. OpenAI acknowledges that AI tools still have limitations and that collaboration between humans and AI yields the best results. The company noted that while CriticGPT is helpful, it doesn't always pinpoint the exact problem within an answer, because errors can be spread across many parts of it.

OpenAI plans to expand the use of CriticGPT, integrating it more deeply into its systems and continuing to improve its accuracy and reliability.
